In November 2023, Germany, France and Italy, three major players in the European Union, reached a groundbreaking agreement on the regulation of artificial intelligence (AI). As reported by Euronews, this agreement represents an important step in shaping the future of AI in the EU. Not only does it represent a joint effort by the major EU economies, but it is also a strategic step towards reconciling the rapid development of AI technologies with the necessary regulatory framework.

This consensus is particularly remarkable in the context of the EU’s general efforts to create a unified and effective approach to AI governance, highlighting the Union’s commitment to leading the global discussion in this critical and constantly evolving area. On December 9, 2023, representatives of the European Parliament and the Council reached an agreement on the AI Act after several days of debate. However, it still needs to be formally adopted by both the Parliament and the Council before it can come into force, which is expected in early 2024. France, Germany, and Italy were among the larger member states that initially opposed the regulation, potentially threatening efforts to pass the bill in the European Parliament.

The EU’s reputation as a regulatory powerhouse

In the broader context of AI regulation, the European Union has taken a leading role by navigating the complex interplay between promoting technological innovation and ensuring ethical governance. The EU’s proactive stance on AI regulation reflects its commitment to balancing technological progress with the protection of societal values. This approach has given the EU a leading role in regulation, though not without criticism regarding its effectiveness and inclusivity.

Germany, France, and Italy, as key players in the EU, have significantly shaped AI policy. Their agreement on AI regulation, which mandates self-regulation for Foundation Models, was a pivotal moment in EU AI governance and significantly influenced the shaping of the AI Act. The trinational agreement reflects a unified approach to regulating AI applications, not the technology itself, emphasizing the importance of model cards for transparency and accountability. Their cooperative stance also indicates a broader EU trend to integrate various national perspectives into a coherent regulatory framework. However, this influence of major economies on EU policy-making has sparked debates. Critics argue that these countries may prioritize their own interests or those of large tech firms, potentially overshadowing the collective needs of the EU. This situation highlights the challenge for the EU to maintain a unified approach to AI governance while considering the diverse interests of its member states.

The concept of mandatory self-regulation

The EU’s AI Act represents a significant shift in AI governance, particularly regarding the mandatory self-regulation of Foundation Models. These models, central to generating various AI outcomes, are now subject to regulation that focuses more on their application than on the technology itself. This is a crucial step towards greater transparency and accountability in AI development.

The concept of mandatory self-regulation introduces a nuanced approach to governance. By requiring Foundation Model developers to define “model cards,” the AI Act prescribes a level of self-assessment and disclosure previously absent. These model cards should provide comprehensive information about the functioning, capabilities, and limitations of AI models. This measure aims to demystify AI technologies for regulators and the public, leading to more informed decisions about their deployment and use.
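As a rough illustration of what such a model card might record, the following Python sketch captures the three kinds of information named above: functioning, capabilities, and limitations. The class name `ModelCard`, its field names, and the `missing_sections` helper are hypothetical and chosen for illustration only; the sketch does not reflect any format prescribed by the AI Act.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical model card; field names are illustrative, not taken from the AI Act."""
    model_name: str
    functioning: str = ""                                   # how the model works
    capabilities: List[str] = field(default_factory=list)   # what it can do
    limitations: List[str] = field(default_factory=list)    # known weaknesses

    def missing_sections(self) -> List[str]:
        """Return the names of the sections that are still empty."""
        sections = {
            "functioning": self.functioning,
            "capabilities": self.capabilities,
            "limitations": self.limitations,
        }
        return [name for name, value in sections.items() if not value]


card = ModelCard(
    model_name="example-foundation-model",  # placeholder name
    functioning="Text generator trained on a large text corpus.",
    capabilities=["summarization", "translation"],
)
print(card.missing_sections())  # lists the sections a developer still has to fill in
```

A completeness check of this kind is one simple way a developer could verify a card before disclosure; what a regulator would actually require remains an open question.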

However, this regulatory approach also raises concerns about its effectiveness and the potential burden on AI developers. The demand for detailed disclosure could be seen as an additional bureaucratic hurdle, particularly inhibiting innovation for smaller companies with limited resources. While the regulation aims to address this by extending mandatory self-regulation to all AI providers regardless of size, its practical impact remains to be seen.

Moreover, the balance between regulation and innovation is a delicate issue. The EU is traditionally seen as a regulatory leader, but there’s a risk that stringent regulations could stifle innovation in the fast-paced AI industry.

AI and society: understanding the EU’s ethical dilemma

Amnesty International has repeatedly highlighted risks to fundamental rights posed by AI technologies in the EU’s AI regulation process. Agnes Callamard, the Secretary-General of Amnesty International, emphasized that the dichotomy between innovation and regulation is misleading and often used by tech companies to avoid strict accountability. The organization points out the risks of AI in mass surveillance, policing, distribution of social benefits, and at borders, where AI technologies can amplify discrimination and human rights violations. Marginalized groups, such as migrants, refugees, and asylum seekers, are most at risk.

Thus, the EU’s regulatory approach faces a dilemma: it aims to promote innovation while ensuring that AI systems, especially those used in critical areas like public safety and welfare, adhere to strict transparency and accountability measures. The challenge is to create a robust legal framework that considers both ethical considerations and the dynamic nature of AI advancements.

AI and data protection

The EU’s AI Act, with its risk-based approach, follows a path known from data protection and the General Data Protection Regulation (GDPR): AI applications are classified into risk categories, and the higher the risk, the stricter the regulatory requirements. Thus, the AI Act is seen as a complement to the GDPR on AI-specific issues. However, consider this: more than half of the companies have seen new, innovative projects fail due to the GDPR, either because of direct requirements or due to uncertainties in interpreting the GDPR. In three out of ten companies, the deployment of new technologies like AI failed due to GDPR consequences.
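The risk-based logic described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the tier names, the example obligations, and the idea that each tier simply inherits the obligations of the tiers below it are placeholders, not the legal categories or requirements of the AI Act.

```python
from enum import IntEnum
from typing import List


class RiskTier(IntEnum):
    """Hypothetical tiers, ordered from lowest to highest risk."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


# Illustrative obligations introduced at each tier (not a legal checklist).
OBLIGATIONS_ADDED = {
    RiskTier.MINIMAL: ["voluntary code of conduct"],
    RiskTier.LIMITED: ["transparency notice to users"],
    RiskTier.HIGH: ["risk assessment", "human oversight", "technical documentation"],
}


def obligations_for(tier: RiskTier) -> List[str]:
    """Collect the obligations of the given tier and of every lower tier."""
    result: List[str] = []
    for level in RiskTier:  # iterates in definition order, lowest tier first
        if level <= tier:
            result.extend(OBLIGATIONS_ADDED[level])
    return result


for tier in RiskTier:
    print(tier.name, len(obligations_for(tier)), "obligations")
```

The point of the sketch is only the monotonic relationship: moving an application into a higher tier never reduces the set of requirements it has to meet.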

Although the use of AI does not raise entirely new issues for data protection, the effort required of companies remains high and may increase. This is due to extensive information and transparency obligations, the need to safeguard the rights of affected individuals, and the technical and organizational measures that must be put in place.

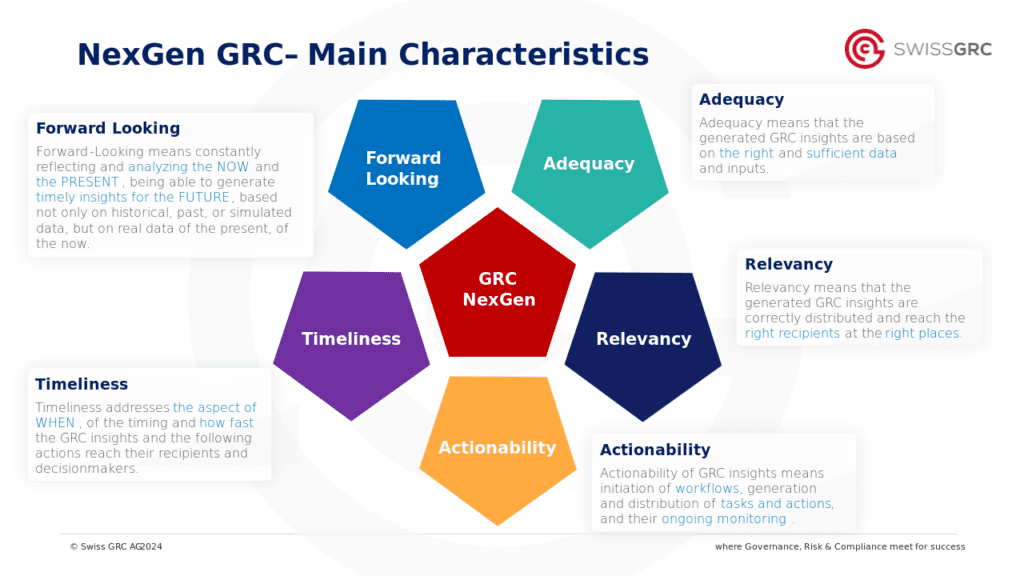

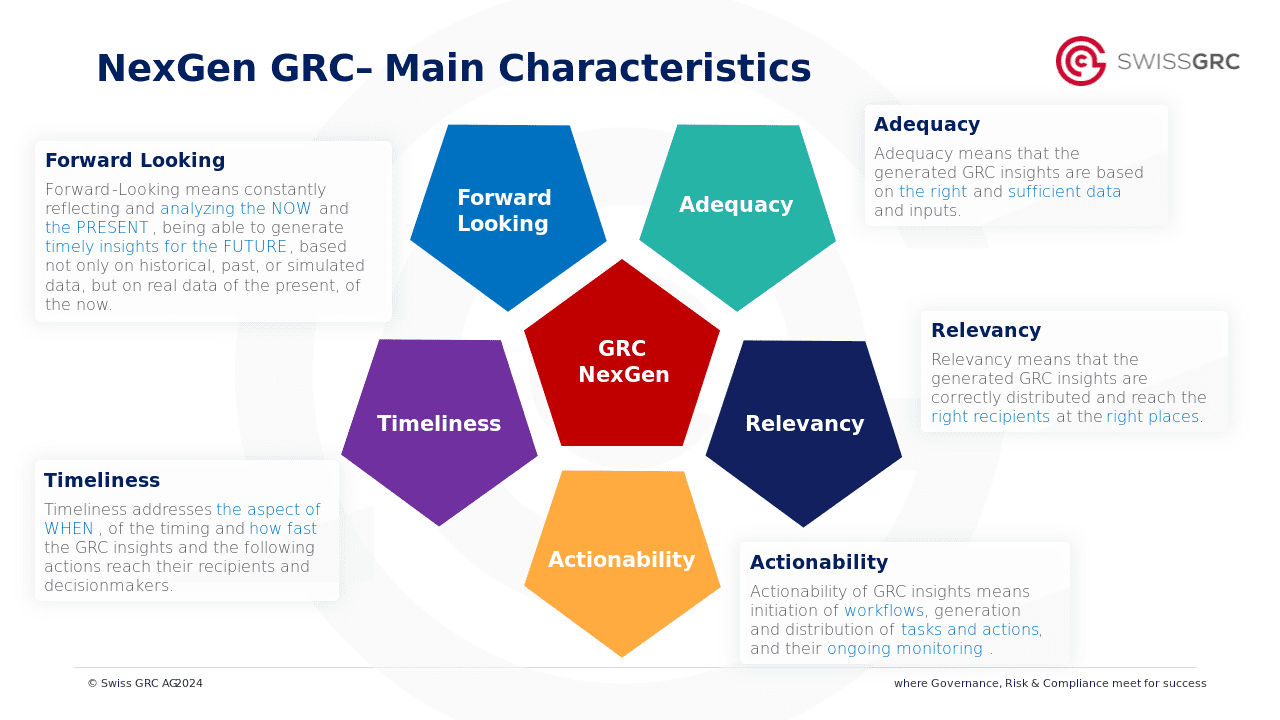

In this evolving scenario, the importance of Governance, Risk, and Compliance (GRC) becomes increasingly significant. Effective GRC strategies are not only essential to master these complex regulations but are also key to enabling companies to use AI opportunities responsibly and ethically. While the AI Act aims to mitigate risks associated with AI, it also underscores the need for robust and adaptable GRC frameworks. These frameworks are crucial to creating a harmonious balance between promoting innovation, complying with legal regulations, and ensuring ethical corporate governance in the rapidly developing field of AI technologies.

The EU approach in a global context

The AI Act will have significant global implications and could set a precedent for AI governance worldwide. It aims to establish comprehensive AI regulation that can influence global standards, using the EU’s large consumer market to trigger the so-called Brussels Effect. The AI Act covers AI systems used in sectors like aviation, automotive, and medical technology. Companies exporting to Europe must comply with these norms, which leads to a broader adoption of EU regulations beyond the Union’s borders. However, this influence could be mitigated by international companies and standard-setting bodies that set norms corresponding to the specific requirements of the AI Act. More likely, the regulation could inspire similar frameworks worldwide. As AI continues to evolve, the EU model, with its focus on a balanced relationship between innovation and regulation, could serve as a template for other countries grappling with the complexity of AI regulation. Consequently, the extent of the Brussels Effect and the role of the EU as a global regulatory leader in AI will evolve as other countries and international bodies engage with and respond to these regulations.

What to expect?

The AI Act marks a crucial moment for the European Union, setting a new direction in AI governance. Its focus on mandatory self-regulation and application-based regulation could influence global AI policy, albeit with nuanced impacts on various sectors and AI systems. This agreement underscores the need for ongoing discussions and adjustments in AI regulation, especially given the rapid development of AI technologies and their societal impacts. However, concerns remain about finding a balance between innovation and ethical stewardship and ensuring that regulations are inclusive and effective without hindering technological progress. The success of this regulatory approach will depend on its implementation and its ability to harmonize different interests within the EU and beyond. In the worst case, Europe could become a region where over-regulation hampers innovation in AI, creating a landscape where technological progress lags behind other global powers. This possibility underscores the urgent need for the EU to continuously refine its legal framework, ensuring it fosters innovation while protecting ethical standards and societal values.

Authors: Yahya Mohamed Mao (Swiss GRC AG), Michael Widmer (Swiss Infosec AG), January 2024
